next(generators)

Table of Contents⌗

- Introduction

- Slightly Beneath

- A yield return Duo

- Generator’s Lost Brother

- A yield yield Conundrum

- Context Managers

Introduction⌗

One of python’s best features in my opinion is generators. I believe it allows for some pretty concise and expressive code, as well as handing out an ergonomic handle to lazy evaluation when needed.

That being said, there’s a lot more to them than meets the eye and you could do a lot more with generators than iterating and collecting data.

In this article I will explore some less discussed capabilities of generators in CPython, going from relatively basic stuff over to stuff that is a bit more complicated, though still not too complicated.

You need some basic understanding of generators, maybe some decorators and higher order functions, but not something too extreme.

I will not claim that any of the generator-guided approaches I’ll introduce here is the best solution to the problem presented, but either way I hope you’ll find them entertaining or discussion-worthy.

Under the Covers⌗

Before we continue, it’s nice to know the basics of iterables, iterators, and iteration in general, over sequences and generators alike.

An iterable is any Python object capable of returning its members one at a time, permitting it to be iterated over in a for-loop. Familiar examples of iterables include lists, tuples, and strings - any such sequence can be iterated over in a for-loop.

There are two methods an object can implement to achieve the title Iterable:

- __getitem__: meaning it enables slicing and indexing, and will raise an IndexError when you try to access an index that is no longer valid. Such an object is sometimes called a sequence. Objects that implement such behavior, to name a few, are list, tuple, str.

- __iter__: meaning you can iterate over its values one after the other. Notice this behavior can absolutely coincide with that of an object that already implements __getitem__ (as every data structure mentioned above does), but not necessarily, as we see in the set data structure, which as we know allows neither slicing nor indexing as it is by nature an unordered collection.

  More importantly for our purposes, this is the behavior of a generator. We want it to be lazy, to only do what it’s supposed to do and yield what it’s supposed to yield only at each iteration, occupying an iterative nature and thus implementing an __iter__ function.

  By definition we will not be able to tell its next-next value at a glance, and we will not be able to take the second half of it without going through the first, as it hasn’t been evaluated yet. So it will not implement __getitem__.
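To make the two routes a bit more tangible, here is a minimal sketch. The names Countdown and squares are made up for this illustration; the first is iterable purely through __getitem__, the second is a generator and so is iterable through __iter__ but cannot be indexed.

```python
class Countdown:
    """Iterable through __getitem__ only: no __iter__, no __len__."""

    def __init__(self, start):
        self.start = start

    def __getitem__(self, index):
        # Raising IndexError is what tells the iteration machinery to stop.
        if index < 0 or index >= self.start:
            raise IndexError(index)
        return self.start - index


def squares(n):
    """A generator: lazy, iterable through __iter__, but not indexable."""
    for i in range(n):
        yield i * i


countdown_items = list(Countdown(3))      # falls back to __getitem__(0), (1), ...
gen = squares(4)
gen_is_own_iterator = iter(gen) is gen    # a generator is its own iterator

try:
    gen[1]                                # generators do not define __getitem__
except TypeError as exc:
    indexing_error = exc

print(countdown_items, gen_is_own_iterator, type(indexing_error).__name__)
```

Nothing here is specific to these toy classes; any object with a well-behaved __getitem__ that starts at index 0 can be looped over the same way.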

Let’s take a look at the following code:

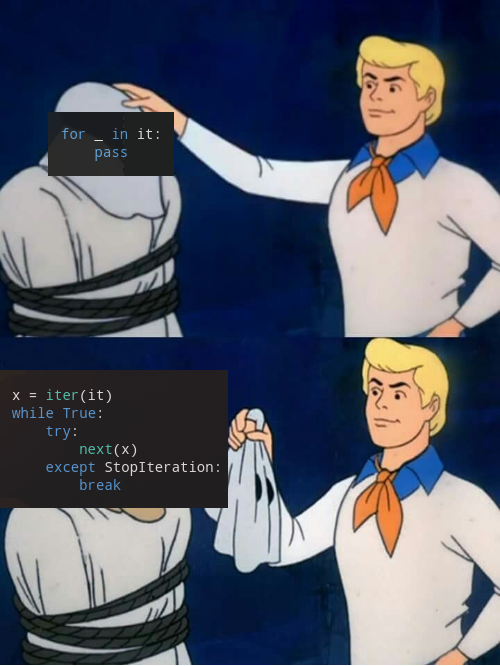

for _ in it:

pass

How does the for loop know when to stop?

Well, you could say that if an indexed data structure lies underneath, its length can be obtained by calling len(), so the loop could just handle the indexing and retrieve the values, translating theoretically to something like

for i in range(len(it)): ... # do stuff with it[i]

But what about a situation where your Iterable does not implement __len__ nor __getitem__, how will you then know when to stop?

Some things to unpack here:

iter - a built-in function that accepts an object and returns a corresponding iterator object, provided by the object’s implementation of the __iter__ method.

The most important thing to notice here is of course- the StopIteration Error.

StopIteration is a built-in Exception that an iterator’s __next__ raises while trying to get the next value when there are none left.

So basically all that’s going on is that the for loop abstracts away the part where you listen for a StopIteration error. It gives you back values as long as they are indeed yielded out, and once there are none left it elegantly just stops, suppressing the error on its way.
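Spelled out by hand, the loop described above looks roughly like the following. This is only a sketch of the protocol, not how CPython literally implements for; manual_for is a hypothetical helper name.

```python
def manual_for(iterable, body):
    """Roughly what `for item in iterable: body(item)` does for us."""
    iterator = iter(iterable)          # asks the object for an iterator
    while True:
        try:
            item = next(iterator)      # calls iterator.__next__()
        except StopIteration:
            break                      # exhausted: leave the loop quietly
        body(item)


seen = []
manual_for({"a", "b"}, seen.append)                  # a set: no indexing, no slicing
manual_for((n * 2 for n in range(3)), seen.append)   # a generator: no len() either
print(seen)
```

Neither the set nor the generator expression supports indexing, yet both are handled by the very same three steps: iter, next, and catching StopIteration.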

Side note: since Python 3.7, a StopIteration exception raised from within generator code is converted to a RuntimeError (PEP 479). See here and in the relevant PEPs linked inside.
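A quick way to see that conversion for yourself, assuming Python 3.7 or newer (leaky is just an illustrative name):

```python
def leaky():
    yield 1
    # Raising StopIteration by hand inside generator code is no longer
    # a quiet way to finish: it comes out as a RuntimeError instead.
    raise StopIteration("done")


gen = leaky()
first = next(gen)

try:
    next(gen)
except RuntimeError as exc:
    converted = exc

print(first, repr(converted), repr(converted.__cause__))
```

The original StopIteration is still reachable through the __cause__ attribute of the RuntimeError, which helps when tracking down where it leaked from.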

yield AND return?⌗

Usually we make a distinction between the semantics of generator functions and those of regular functions.

The distinction being yield is the keyword we use to output values back to the caller in a generator function (in a routine manner) and return to give back a value in a regular function.

So what if we use both yield and return in the same function?

The following works in Python 3 only (3.3 and later, to be precise).

Let’s create such a function, try to catch its values and examine its behavior.

    In [1]: def rgen():
       ...:     yield "came from yield"
       ...:     return "came from return"

Now let’s think how we can get those values.

The yielded value will be obtained as usual by advancing the generator object, as in next(rgen()). But what about the return value?

So if we take a look at PEP-255, it says

When a return statement is encountered, control proceeds as in any function return, executing the appropriate finally clauses (if any exist). Then a StopIteration exception is raised, signalling that the iterator is exhausted.

Ok so that’s fine, it’ll raise a StopIteration exception signaling we’re done. if that’s true then.. let’s try and catch it.

In [2]: g = rgen()

In [3]: next(g)

Which outputs, as expected:

came from yield

OK that seems alright but where’s the return value? That’s where it gets interesting-

A StopIteration attribute, namely value, was added in v3.3 (source).

So our value kind of hides in the StopIteration error, let’s see:

    In [4]: try:
       ...:     print(next(g))
       ...: except StopIteration as e:
       ...:     print(e.value)

We run it and indeed the return raised a StopIteration exception which we successfully caught, printing:

came from return

So that’s neat. Notice the meaning behind the return in this context, as said in the pep: “I’m done, but I have one final useful value to return too, and this is it”, with an emphasis on I’m done, because any yield after the return statement will not be executed.

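Note that from the caller’s side this looks, generally speaking, just like a raise StopIteration(value) would, so the applications are pretty similar. Just don’t write that raise yourself inside the generator: as mentioned in the side note above, it would be converted to a RuntimeError, so return value is the way to spell it.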

If you wish to terminate a generator function early and give an indication as to what happened or some sort of a resulting value, that may be the way to go.
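If catching StopIteration by hand every time feels clunky, here is one possible sketch of two ways around it. drain is a hypothetical helper written for this example, while yield from is standard syntax (PEP 380) that evaluates to the inner generator’s return value.

```python
def rgen():
    yield "came from yield"
    return "came from return"


def drain(gen):
    """Hypothetical helper: collect the yielded items and the return value."""
    items = []
    while True:
        try:
            items.append(next(gen))
        except StopIteration as stop:
            return items, stop.value


def delegating():
    # `yield from` re-yields everything rgen() yields and then
    # evaluates to whatever rgen() returned.
    result = yield from rgen()
    yield f"inner generator returned: {result}"


items, returned = drain(rgen())
delegated = list(delegating())
print(items, returned, delegated)
```

Keep in mind that a plain for loop or list() simply discards the return value, so it only reaches you through one of these routes.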

After all the StopIteration stuff and in a slight change of pace let’s explore something else.

Generator’s Lost Brother⌗

Introduced in PEP-342, coroutines are a somewhat obscure feature of python, more often than not left out of tutorials covering generators.

In essence, a coroutine is a generator, using the syntax and nature of the generator, but kind of backwards. Instead of spitting out values, the coroutine takes them in.

Let’s see an example:

    def f():
        print('listening...')
        x = yield
        print(f"I received {x}!")

Kinda weird at a glance: what is the yield doing on the right side of an assignment?

I said a coroutine would take a value in. So what we’ll do here is send a value through the yield placing it in x. The way we’re going to do that is by the send method (provided by the beloved pep-342).

>>> x = f()

>>> next(x) # first we must advance the function to the yield statement

listening...

>>> x.send('a greeting')

I received a greeting!

# followed by a nasty StopIteration

The send method accepts the argument, sends it to the coroutine, then advances to the next yield statement. If there is none, a StopIteration error will be raised.
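For a coroutine that keeps listening instead of finishing after one message, a loop around the yield does the trick. The following is a small sketch; collector and sink are names invented for the example.

```python
def collector(sink):
    """Coroutine that only consumes: every value sent in is stored in sink."""
    while True:
        item = yield              # pause here until someone calls send()
        sink.append(item.upper())


received = []
co = collector(received)
next(co)                          # prime it: run up to the first yield
replies = [co.send(word) for word in ("spam", "eggs")]

# Sending a real value before priming fails, because there is
# no paused yield yet that could receive it.
fresh = collector([])
try:
    fresh.send("too early")
except TypeError as exc:
    unprimed_error = exc

print(received, replies, unprimed_error)
```

Each send returns None here because the bare yield hands nothing back; all the traffic goes inwards.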

Side notes:

- You could also send a value to a regular generator, but the value you get back would just be the thing it yields.

- You may want to .close() that coroutine after you’re done with it.

- You can throw, or rather, “inject” an exception as if it was raised inside a coroutine. Meaning we could x.throw(RuntimeError("Something's Gone Terribly Wrong")) for example and it’d behave as if the error originated from the suspension point at the yield.

- See actual more concrete examples for coroutines in the PEP.
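The throw and close notes above can be seen in action with a sketch like this one. listener and log are illustrative names, and the try/except/finally layout is just one way to make both effects visible.

```python
def listener(log):
    try:
        while True:
            try:
                msg = yield
                log.append(f"got {msg}")
            except RuntimeError as exc:
                # An exception injected with throw() surfaces at the yield.
                log.append(f"recovered from: {exc}")
    finally:
        # close() raises GeneratorExit at the yield, so cleanup lands here.
        log.append("closed")


log = []
co = listener(log)
next(co)                                   # advance to the first yield
co.send("hello")
co.throw(RuntimeError("Something's Gone Terribly Wrong"))
co.close()
print(log)
# ['got hello', "recovered from: Something's Gone Terribly Wrong", 'closed']
```

Because the coroutine catches the injected RuntimeError and loops back to the yield, throw returns normally; had it not been caught, the error would have propagated to the caller of throw.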

I will not dwell on it too much. What I do want to discuss is a simple misguided idea I had about coroutines, which led me to better understand them as a whole.

A yield yield conundrum⌗

understanding by a misunderstanding⌗

When I first encountered the concept of generator-coroutine I had an idea, what if we made a function to be a coroutine and a generator at the same time?

I remember at the time I’ve read some text about the subject that mentioned something like this will lead to weird behavior, might make your mind bend (dabeaz probably? sounds like him lol) and other crazy warnings.

Perhaps I should have listened.

My idea was extremely simple: make a function that yields the (yield), such that when you send a value to the function it will yield it back to you. Basically an echo generator-coroutine.

    def f():
        while True:
            yield (yield)

Note we have to put the second yield in brackets to let the parser know it’s an expression (one we would later send a value to) rather than just the keyword yield, which would kinda entail we’re trying to yield the yield keyword and result in a syntax error.

Let’s test this out:

In [7]: x = f()

In [8]: next(x)

In [9]: x.send('hello')

Out[9]: 'hello'

In [10]: x.send('hello')

In [11]: x.send('hello')

Out[11]: 'hello'

In [12]: x.send('hello')

In [13]: x.send('hello')

Out[13]: 'hello'

Seemingly it only responds to every other message we pass it. What’s going on??

In the example above I use ipython, in which if the response is None, it doesn’t display it.

So let’s just be precise– it’s not that it doesn’t respond to every other send, it’s that every other send yields back None.

Why?

So we can begin to understand by taking a look at the disassembly:

    In [14]: from dis import dis

    In [15]: dis(f)
      3     >>    0 LOAD_CONST               0 (None)
                  2 YIELD_VALUE
                  4 YIELD_VALUE
                  6 POP_TOP
                  8 JUMP_ABSOLUTE            0
                 10 LOAD_CONST               0 (None)
                 12 RETURN_VALUE

For some reason it first loads the const None then yields stuff out.

PEP-342 says: “The yield-statement will be allowed to be used on the right-hand side of an assignment; in that case it is referred to as yield-expression. The value of this yield-expression is None unless send() was called with a non-None argument.”

What’s actually going on is two things:

- The first yield yields None, being that the (yield) evaluates to None.

- The second yield yields the value sent to the first yield, that being our argument to the send method.

To draw the two points together - the first always outputs None and receives some value. The second is always outputting the former value and ignoring input. Remember- yield always has both input & output, both of which can be None.

If you think about it, it doesn’t make sense for it to be any other way. We naively expected it to stop after the first yield, wait for us to send value to the second (yield) and echo back, but.. that’s not really reasonable considering the nature of the generator, just like the pep says “Blocks in Python are not compiled into thunks; rather, yield suspends execution of the generator’s frame.”.

Of course it wouldn’t wait for our response, it’s a generator that already took control! the second yield has to have a default value in order for the generator to yield something back to us.
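Outside of ipython, where None is not hidden from us, the alternation is easy to record. A short sketch (echo_attempt is the same function as f above under a more descriptive, made-up name):

```python
def echo_attempt():
    while True:
        yield (yield)


gen = echo_attempt()
trace = [next(gen)]                 # the inner yield hands out None
for word in ("a", "b", "c", "d"):
    trace.append(gen.send(word))    # alternates: outer yield, inner yield, ...

print(trace)
# [None, 'a', None, 'c', None]
```

Every second send lands on the outer yield, whose received value is simply thrown away, which is why 'b' and 'd' never come back.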

A fix⌗

With the understanding we’ve acquired, can we fix it? Well yeah, we know by now a coroutine has both an input and an output. So granted we wouldn’t have a cool yield yield but by understanding that the behavior we were looking for was already deeply rooted in the coroutine, we could just do-

    def f():
        v = None
        while True:
            v = yield v

Making the yield take v as an input and an output interchangeably.
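Running the fixed version confirms that every message now comes straight back. This is only a usage sketch; echo is the same function as f above, renamed for readability.

```python
def echo():
    v = None
    while True:
        v = yield v        # hand out the last value, wait for the next one


gen = echo()
primed = next(gen)                             # the initial None
replies = [gen.send(word) for word in ("a", "b", "c")]
print(primed, replies)
# None ['a', 'b', 'c']
```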

That’s the end of my tale. Thanks for coming to my ted talk.

Bonus chapter! - looking at Context Managers through generator glasses.

A Different Perspective⌗

Besides just handling iteration and spitting out values, generators have a really interesting property - they hold state. You pass control to a generator function and it “halts” until you actively advance it further.

So whenever you use a generator, there’s this two part story at play here; The first happens up until the yield, the second unfolds right after it.

How can we take advantage of this? Context Managers. It’s kind of a famous feature around the python ecosystem as it provides a somewhat elegant handle to 2-part operations (such as opening and closing a file, acquiring and releasing a lock, etc.).

Which is conceptually kind of similar to a behaviour of a generator function (for simplicity’s sake think of a function that yields only once).

In any case, such context managers can regularly be implemented as a class with the dunder methods __enter__ and __exit__.

A classic example is this: let’s say we want to create a temp directory, meaning we create a new folder, do some stuff in it, then delete it. It should look somewhat like this:

    import tempfile
    import shutil

    class Tempdir:
        def __enter__(self):
            self.dirname = tempfile.mkdtemp()
            return self.dirname

        def __exit__(self, exc, val, tb):
            shutil.rmtree(self.dirname)

At enter we do our deeds and at exit we clean up.
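As a usage sketch, this is how such a class would be driven by a with statement. The scratch.txt file name is arbitrary and only there to show the directory is usable while we are inside the block.

```python
import os
import shutil
import tempfile


class Tempdir:
    def __enter__(self):
        self.dirname = tempfile.mkdtemp()
        return self.dirname

    def __exit__(self, exc, val, tb):
        shutil.rmtree(self.dirname)


with Tempdir() as path:
    existed_inside = os.path.isdir(path)
    # Do some stuff in the folder while it exists.
    with open(os.path.join(path, "scratch.txt"), "w") as fh:
        fh.write("temporary data")

exists_after = os.path.exists(path)
print(existed_inside, exists_after)
```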

That’s great and all, but I probably wouldn’t want to implement such a class for every context manager I need. I think we could do better.

What if we could abstract out the class and provide a simpler interface for creating those sorts of context managers?

Game Plan⌗

We’re going to make an interface that wraps around a generator function in which we’re doing stuff at the entry point (could be opening a file, starting a timer, whatever), then yielding after it to mark the halfpoint, after which we merely clean up (closing something, ending a timer, etc), with as little boilerplate as possible.

In pseudo code, what we’re looking to accomplish here is an interface along the lines of;

    @ContextManager
    def dostuff():
        start operation
        yield resource  # if needed, could be a handle to a file, could be some other operation
        end operation

Where ContextManager is the class we’ve abstracted with which we’re able to convert a generator-function (dostuff) into a context manager.

To keep things simple, for now we’ll just make our CM print something on entry, return some value, then alert us with another print when we’re finished.

    class ContextManager:
        def __init__(self, func, *args, **kwargs):
            self.gen = func(*args, **kwargs)

Simple enough, we got only one attribute which will be a generator-instance of the generator-function we’re wrapping.

Now let’s think about the entry point.

All we really want from it is two things-

- Advance us to the yield statement (evaluate stuff on your way there while you’re at it).

- Give us back any object that we may want to receive from the resource in question.

        def __enter__(self):
            return next(self.gen, None)

This is almost too simple. Just next that generator and give us whatever comes back.

Next (no pun intended) we need to implement an exit. What we’re really waiting for now, is the well expected StopIteration exception (as again we planned one yield only).

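        def __exit__(self, *e):
            next(self.gen, None)

Note: *e collects the three exception details python passes to __exit__ (type, value and traceback), which for our purposes aren’t really interesting, so we’re just going to blatantly pretend we didn’t see them. shhh… Do keep in mind that pretending is not suppressing: since our __exit__ returns None, an exception raised inside the with block still propagates once the cleanup has run.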

Why another next if there’s no more stuff to yield you ask? simply because we want to drag ourselves straight down to the end of the function.
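One detail that is easy to check is what happens when the with block blows up. The sketch below reuses the three methods built so far; tracked and events are names made up for the experiment.

```python
class ContextManager:
    def __init__(self, func, *args, **kwargs):
        self.gen = func(*args, **kwargs)

    def __enter__(self):
        return next(self.gen, None)

    def __exit__(self, *e):
        # Returns None (falsy), so an in-flight exception is not swallowed.
        next(self.gen, None)


events = []


def tracked():
    events.append("enter")
    yield "resource"
    events.append("cleanup")


try:
    with ContextManager(tracked) as res:
        events.append(f"using {res}")
        raise ValueError("boom")
except ValueError:
    events.append("error reached the caller")

print(events)
# ['enter', 'using resource', 'cleanup', 'error reached the caller']
```

So the second half of the generator still runs, and the error carries on to whoever wrapped the with block.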

Tired yet? well don’t be because we’re done!!

Let’s make a simple function to see that it works:

    @ContextManager
    def context_hello():
        print("Initiating Operating..")
        yield "Hello from Context Manager!!!"
        print("Operation Completed!")

    # And of course use it like so;
    with context_hello as resource:
        print(resource)

And indeed if we run the file $ python cm.py we get:

Initiating Operating..

Hello from Context Manager!!!

Operation Completed!

Small Caveat⌗

I know I know I said we’re done. But see now we’ve got a certain limitation; What if we wanted to pass a parameter to the context manager? Like what if say we wanted that a different kind of string would come back?

Note: It may sound silly for us to try and return our own string but think about it in a broader way; let’s say we make a file opener CM, wouldn’t we want to specify the file’s name?

So let’s think about it, how would we make our CM open to receiving an argument? We obviously can’t just

    with context_hello('Custom Message') as resource:
        print(resource)

right? We’d get an error-

TypeError: 'ContextManager' object is not callable

Why is that?

Well think about it, we wrapped our generator function with a class, so context_hello is by now an instance of that class.

See it yourself by running

type(context_hello)

A class which by no means implements a __call__ method, so when we specify () after context_hello python doesn’t know what the hell to do with this call.
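The following sketch checks those claims directly, and also pokes at a related side effect of wrapping at decoration time: there is only one generator, so the object can serve a single with block. The yielded string is the one from the example above; everything else is plain introspection.

```python
class ContextManager:
    def __init__(self, func, *args, **kwargs):
        self.gen = func(*args, **kwargs)

    def __enter__(self):
        return next(self.gen, None)

    def __exit__(self, *e):
        next(self.gen, None)


@ContextManager
def context_hello():
    yield "Hello from Context Manager!!!"


kind = type(context_hello).__name__       # the name is bound to an instance
is_callable = callable(context_hello)     # no __call__, so not callable

try:
    context_hello("Custom Message")
except TypeError as exc:
    call_error = str(exc)

# The one and only generator is used up by the first with block,
# so a second block just gets the None default back.
with context_hello as first:
    pass
with context_hello as second:
    pass

print(kind, is_callable, call_error, first, second)
```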

So what can we do?

At this point we’re way past __init__, so somehow passing the arguments to the function is pretty overdue.

By the way, I kinda fooled you with the *args and **kwargs in the implementation there, we are definitely passing absolutely nothing there.

Giving arguments to pass to the function from the call to the initialization is too confusing and backwards to even think about.

So we can do something very simple here, turn it back to a function!

    def contextmanager(func):
        return lambda *args, **kwargs: ContextManager(func, *args, **kwargs)

Instead of our decorator being a class, we’ll make it a function that’d receive the arguments and actually pass those to our precious __init__, after which they will be passed straight into the generator instance by self.gen = func(*args, **kwargs).
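A possible variation on that decorator, offered only as a sketch: swapping the lambda for a named inner function decorated with functools.wraps keeps the original function’s name and docstring around. The inner name factory is arbitrary.

```python
import functools


class ContextManager:
    def __init__(self, func, *args, **kwargs):
        self.gen = func(*args, **kwargs)

    def __enter__(self):
        return next(self.gen)

    def __exit__(self, *e):
        next(self.gen, None)


def contextmanager(func):
    @functools.wraps(func)               # copy __name__, __doc__, etc.
    def factory(*args, **kwargs):
        # A fresh ContextManager (and generator) is built on every call.
        return ContextManager(func, *args, **kwargs)
    return factory


@contextmanager
def context_hello(message):
    """Yield a custom message."""
    yield message


with context_hello("one") as a:
    pass
with context_hello("two") as b:
    pass

print(a, b, context_hello.__name__, context_hello.__doc__)
```

Since each call builds a new generator, the same decorated function can now be used in as many with blocks as we like.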

We end up with:

    from typing import Generator

    class ContextManager:
        def __init__(self, func, *args, **kwargs):
            self.gen: Generator = func(*args, **kwargs)

        def __enter__(self):
            return next(self.gen)

        def __exit__(self, *e):
            next(self.gen, None)

    def contextmanager(func):
        return lambda *args, **kwargs: ContextManager(func, *args, **kwargs)

    @contextmanager
    def context_hello(message):
        print("Initiating Operating")
        yield message
        print("Operation Completed")

    with context_hello('Custom Message!') as resource:
        print(resource)

And get our desired output:

Initiating Operating

Custom Message!

Operation Completed

And that’s about it, we succeeded in creating a custom, generic and reusable Context Manager wrapper that allows us to create any kind of CM we’d like pretty easily.

Remember the Tempdir class we’ve seen at the beginning of this chapter?

To leave with a good taste, let’s see an example of how to implement such a tempdir context manager, but this time in yield form with our new contextmanager wrapper;

    @contextmanager
    def tempdir():
        dirname = tempfile.mkdtemp()
        yield dirname
        shutil.rmtree(dirname)

It’s a nice example indeed but do take note that from version 3.2 onwards you may use tempfile.TemporaryDirectory that works as context manager out of the box.
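For completeness, the standard-library route looks like this. The marker.txt file is an arbitrary example used to show the directory exists inside the block and is gone afterwards.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as dirname:
    marker = os.path.join(dirname, "marker.txt")
    with open(marker, "w") as fh:
        fh.write("hi")
    existed_inside = os.path.exists(marker)

exists_after = os.path.exists(dirname)
print(existed_inside, exists_after)
```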

Digressions, Clarifications⌗

- I didn’t tell you, but what we’ve actually gone through here is a miniature implementation of python’s contextlib.contextmanager.

- The actual implementation is a lot more complex, it handles a lot more edge cases and exceptions, and its recommended usage is generally more cautious as well.

- I hope the simplification made it somewhat more digestible for you.
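To give a taste of that more cautious usage, here is the tempdir example written against the real contextlib.contextmanager. With the standard decorator, an exception from the with block is re-raised inside the generator at the yield, so the cleanup is usually placed in a finally clause. The simulated KeyError is only there to exercise that path.

```python
import contextlib
import os
import shutil
import tempfile


@contextlib.contextmanager
def tempdir():
    dirname = tempfile.mkdtemp()
    try:
        yield dirname
    finally:
        # Runs whether the with block finished normally or raised.
        shutil.rmtree(dirname)


try:
    with tempdir() as path:
        created = os.path.isdir(path)
        raise KeyError("simulated failure")
except KeyError:
    removed = not os.path.exists(path)

print(created, removed)
```

Without the try/finally, the error thrown in at the yield would skip the rmtree call and leave the folder behind.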

Lessons from this Chapter⌗

- We can easily make cool context managers with @contextmanager.

- And this, in my opinion, is more important: we’ve created a different kind of mechanism around generators. We’re using generators to do something very different from their ordinary use. There is no iterating over a sequence here, nor messing with concurrency, nothing like that. The generator merely acts as a mediator between entering and exiting. Which is pretty cool, or at least, educational.

To be Continued...? maybe